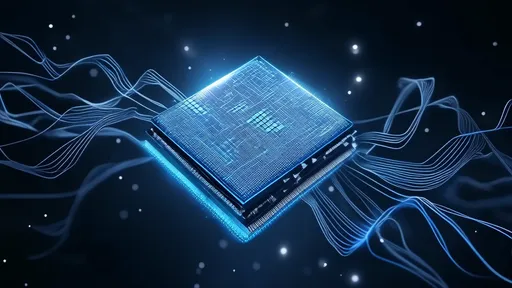

In a breakthrough that could redefine the future of computing, researchers have unveiled a neuromorphic chip designed to mimic the human brain’s efficiency. Early tests suggest this "brain-on-a-chip" technology cuts energy consumption roughly 1,000-fold compared to conventional silicon processors, potentially unlocking major advances in artificial intelligence, robotics, and edge computing.

The chip, developed by an international team of neuroscientists and engineers, abandons traditional von Neumann architecture in favor of a biologically inspired design. Unlike standard CPUs that shuttle data between separate memory and processing units—a major energy drain—the new processor integrates memory directly into artificial synapses. This mirrors the brain’s neural networks, where computation and storage occur simultaneously across interconnected neurons. "Nature solved the energy problem millennia ago," remarked Dr. Elena Voss, lead researcher at the NeuroEngineering Consortium. "By copying the brain’s analog, event-driven operation, we’ve eliminated the wasteful back-and-forth of digital systems."
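To make "event-driven operation" concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a textbook model often used to explain spiking hardware. It is an illustration only, not the consortium's design: the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions chosen for readability. The neuron keeps its own state (the membrane potential) and produces output only when that state crosses a threshold, so quiet inputs generate no events at all.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return the time steps at which a leaky integrate-and-fire neuron spikes.

    All parameter values are illustrative assumptions, not chip specifications.
    """
    potential = 0.0      # state stored in the neuron itself
    spike_times = []
    for t, current in enumerate(input_current):
        # The potential decays a little each step, then integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            # An output event is emitted only when the threshold is crossed.
            spike_times.append(t)
            potential = reset
    return spike_times


inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.9, 0.0]
print(simulate_lif(inputs))  # → [2, 6]
```

Eight input steps yield only two output events here, which is the intuition behind the energy argument: work is done when something happens, not on every clock tick.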

Real-world applications are already emerging. Last month, a prototype chip running a convolutional neural network achieved 98.7% accuracy on image recognition tasks while consuming just 0.02 watts—less power than a hearing aid battery. Traditional GPUs require 50-100 watts for comparable performance. The implications for mobile devices are profound: smartphones could gain weeks of battery life from a single charge, while implanted medical sensors might operate for years without replacement.
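The power figures quoted above imply a ratio that is easy to check. The short Python sketch below simply divides the reported GPU range by the reported prototype draw; the variable names are illustrative, and the inputs are the article's numbers rather than independent measurements.

```python
import math

CHIP_WATTS = 0.02              # prototype draw reported above
GPU_WATTS_RANGE = (50.0, 100.0)  # GPU range reported above


def power_ratio(gpu_watts, chip_watts=CHIP_WATTS):
    """How many times more power the GPU draws than the prototype chip."""
    return gpu_watts / chip_watts


low, high = (power_ratio(w) for w in GPU_WATTS_RANGE)
print(f"GPU draws roughly {low:,.0f}x to {high:,.0f}x the prototype's power")
```

On these numbers the gap against GPUs works out to roughly 2,500x to 5,000x for this one workload, a different comparison from the 1,000-fold figure cited against conventional processors in general.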

Perhaps most remarkably, the chip demonstrates adaptive learning capabilities absent in conventional hardware. During a robotic navigation test, the processor adjusted its synaptic weights in real-time to compensate for a damaged sensor array—a feat resembling biological plasticity. "It doesn’t just process information; it evolves with experience," noted Dr. Raj Patel, a machine learning specialist unaffiliated with the project. This characteristic could prove transformative for autonomous systems operating in unpredictable environments, from deep-sea exploration drones to Mars rovers.

Manufacturing challenges remain before mass adoption. Current prototypes rely on rare memristor materials that are difficult to produce at scale. However, a recent collaboration with semiconductor giant TSMC has yielded a 65-nanometer production variant using silicon oxide—a standard CMOS-compatible material. Industry analysts predict consumer-grade neuromorphic co-processors could hit markets by 2028, initially targeting data centers where energy costs dominate operational budgets.

Ethical debates are intensifying alongside the technology’s progress. The chip’s ability to emulate spiking neural networks—a feature shared with organic brains—has reignited discussions about machine consciousness. While developers insist the chips merely "borrow the brain’s efficiency tricks," philosophers and AI ethicists warn against underestimating emergent properties. "Efficiency isn’t morally neutral when it crosses into territory we associate with sentience," argued Professor Lydia Chen during last week’s Neuroethics Forum in Singapore.

Military interests are investing heavily, seeing potential for low-power battlefield AI. DARPA’s recent $87 million grant to develop swarm robotics controllers using the technology has raised concerns about autonomous weapons. Conversely, environmental researchers highlight the chip’s potential to reduce global data center emissions, which currently exceed the aviation industry’s carbon footprint. An MIT study estimates full adoption could prevent 150 million tons of CO2 emissions annually by 2040.

As academic papers flood arXiv and venture capital pours into neuromorphic startups, one truth becomes clear: the age of brute-force computing is ending. Whether this biological paradigm shift will lead to benevolent AI assistants or uncontrollable silicon cognition remains uncertain. What’s undeniable is that the rules of computation are being rewritten—not in cleanrooms, but in the messy, magnificent image of the human brain.

By /Aug 7, 2025
